Introduction

For a long time I have been fascinated by walking robots, especially the awesome creations from Boston Dynamics. Their 26 km/h WildCat made the headlines last year. But quadruped robots like that are something you can’t build by yourself. They require a huge amount of money and programming skills. Or do they?

Ideas

Most four-legged robots that you can find on the internet use a spider-like leg configuration, where the legs are arranged symmetrically around the center of the robot. Although this would be more stable and easier to program, I decided to build a mammal-inspired robot, mostly because I wanted a challenge and it leaves more space for a proper design.

Electronics

The most expensive parts of legged robots are often the servo motors. Most professional robots use “smart servos” like Dynamixel or Herkulex. They are better than regular servos in almost every way (torque, speed, accuracy), but normally cost between $60 and $500 apiece, and we need twelve of them. For my robot I decided to use HobbyKing servos instead, which are ridiculously cheap:

http://www.hobbyking.com/hobbyking/store/__16269__hk15138_standard_analog_servo_38g_4_3kg_0_17s.html

I paid around $40 for all twelve. Despite the price, the quality is pretty good: they don’t have much trouble lifting the robot’s weight.

As a servo controller I first wanted to use a Pololu Maestro, but I had some problems with it. It did not accept the power supply I was using, which led to servo jittering and uncontrollable movement. Now I am using an Arduino MEGA, which has enough pins to control all twelve servos on its own.

As a battery for the servos I am currently using a 6.6V 3000mAh 20C LiFePO4 receiver pack:

http://www.hobbyking.com/hobbyking/store/__23826__Turnigy_nano_tech_3000mAh_2S1P_20_40C_LiFePo4_Receiver_Pack_.html.
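The numbers on the pack label can be checked with a little arithmetic. A 2S LiFePO4 pack has two cells in series at a nominal 3.3 V each, and the C rating gives the maximum continuous discharge current as a multiple of the capacity. The runtime line is only a rough estimate: \(\bar{I}\), the average current drawn by all servos together, is not given here and would have to be measured.

```latex
\begin{aligned}
V_{\text{nominal}} &= 2 \times 3.3\,\text{V} = 6.6\,\text{V} \\
I_{\max} &= 20 \times 3\,\text{A} = 60\,\text{A} \\
t_{\text{run}} &\approx \frac{3\,\text{Ah}}{\bar{I}}
\end{aligned}
```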

The Arduino is powered over USB by a laptop or, for untethered testing, by a 5V 1A power bank.

Because the robot will not be autonomous for the time being, it needs a remote of some sort. I decided to write an Android app and plug a Bluetooth module into the Arduino.

In a later update I added an ultrasonic distance sensor to the robot’s head, which can be rotated using a small servo. This way, the robot can scan its environment and detect whether it is about to run into a wall.
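The distance measurement itself is simple time-of-flight arithmetic. The sketch below assumes an HC-SR04-style sensor (the post does not name the model) that reports the round-trip echo time in microseconds; on the Arduino that value would typically come from `pulseIn(echoPin, HIGH)`. The helper names and the idea of flagging a wall when any reading of a head sweep falls below a threshold are illustrative assumptions, not the robot's actual code.

```cpp
#include <stddef.h>

// Speed of sound at roughly 20 degrees C, in centimetres per microsecond.
const float SOUND_CM_PER_US = 0.0343f;

// Round-trip echo time (microseconds) -> one-way distance (centimetres).
float echoToCm(unsigned long echoUs) {
  return echoUs * SOUND_CM_PER_US / 2.0f;
}

// Index of the closest reading in a sweep of head angles.
// Assumes count > 0.
size_t nearestReading(const float* distancesCm, size_t count) {
  size_t best = 0;
  for (size_t i = 1; i < count; ++i) {
    if (distancesCm[i] < distancesCm[best]) best = i;
  }
  return best;
}

// True if anything in the sweep is closer than limitCm (hypothetical rule).
bool wallAhead(const float* distancesCm, size_t count, float limitCm) {
  return count > 0 && distancesCm[nearestReading(distancesCm, count)] < limitCm;
}
```

Returning the index rather than the distance also tells the caller which head angle the obstacle was seen at.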

Mechanical Design

To connect servos and electronics, some kind of chassis is needed. At first I thought about 3D-printing one, but that would break the budget, as I don’t have my own printer and would have to order all the parts. That meant I needed a 2D-like design. Wood does not look professional enough and aluminum is too heavy, so I went with acrylic glass.

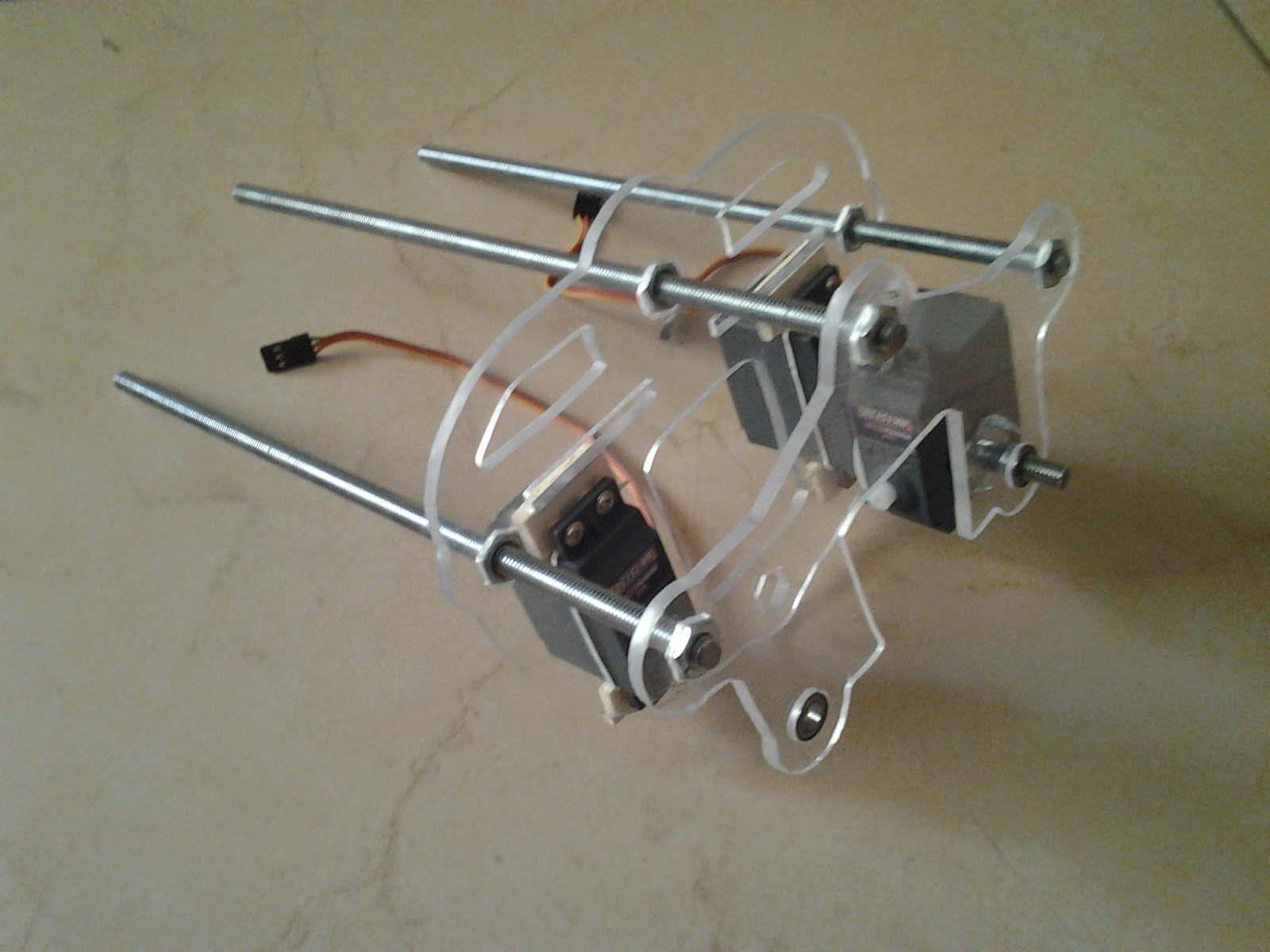

Four acrylic plates (5mm) form the robot's body. They are connected by nuts on threaded rods, which are covered in aluminum tubes. The inner plates are rounded and have foam on the sides to protect the robot when it falls over. The outer plates hold the counter bearings, which are made of a T-nut, a piece of threaded rod and a small ball bearing. Another horizontal plate serves as a mount for both the Arduino and the battery. Upper and lower legs are connected by acrylic parts, also using ball bearings for the joints. On the lower legs I had to improvise: they are made of cheap camera tripod legs, secured with cable ties. The rubber ends ensure good grip.

Every robot needs a head, so I added one later. The ultrasonic sensor serves as the robot’s eyes.

Hand made prototype:

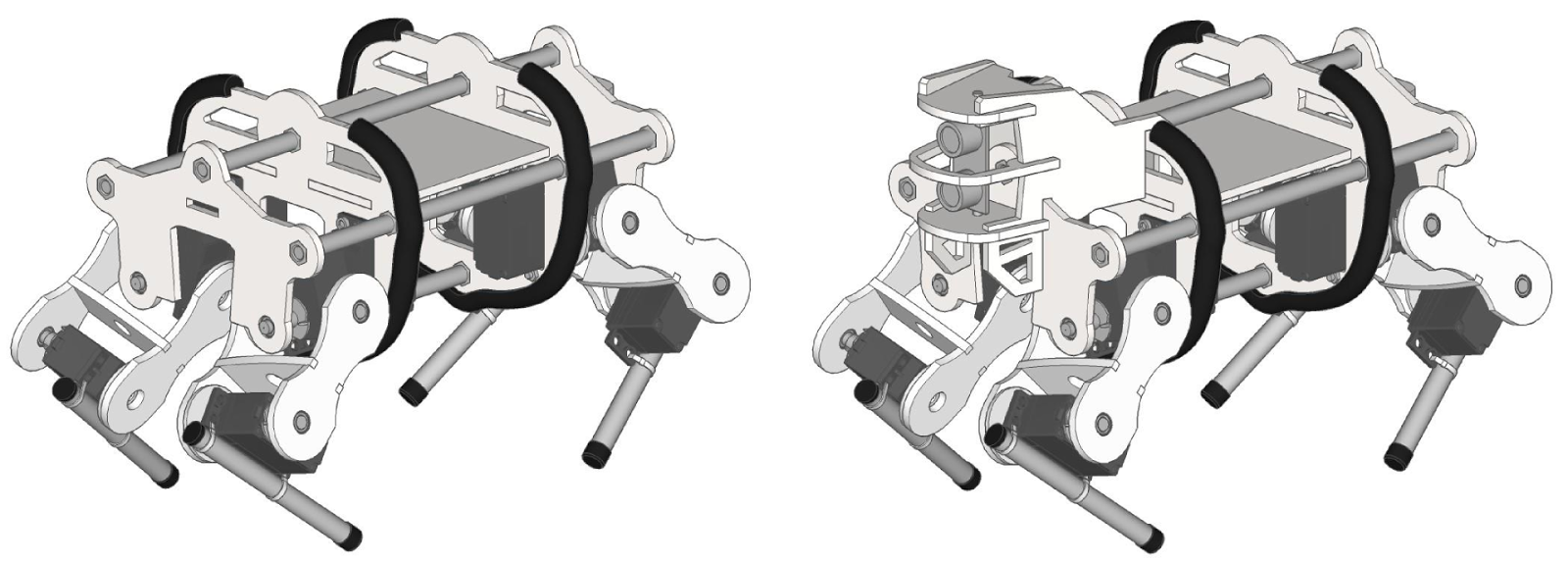

Laser cut parts from Formulor:

The finished robot:

Cat for scale:

Everything from the first sketches to the final design was done in SketchUp. At first I made some prototype parts using a fretsaw, which took me hours. Later I decided to order the parts online and came across Formulor, which offers cheap laser-cut parts and quick shipping. The precision is impressive; even the edgy curves caused by SketchUp are visible. I somewhat underestimated the stability of 5mm acrylic glass: 3mm should be enough and would save weight.

The unpowered robot would fall over immediately, so I made a simple stand for it using a three-legged stool. Two strings on the front and back of the robot can be attached to a plastic rod on top of it.

If you want to build the robot yourself, I am providing templates for the mechanical parts:

https://www.dropbox.com/s/qaavo54booxvpmb/Quadruped%20templates.zip?dl=0

Arduino Sketch

The Arduino sketch is divided into multiple files (tabs): Main, IK, Gait, Serial and Servo.

The main file calls the other functions and initializes most of the variables.

To move the robot’s feet you need to calculate the inverse kinematics for each leg. This is basically a set of equations where you input coordinates and get servo angles as a result. You can either use simple school math or search the internet for solutions. Trying out the calculation in Excel before flashing it to the Arduino can save a lot of time. Once this is implemented, the robot can move its body precisely along the X, Y and Z axes. To rotate the robot you need a rotation matrix. This is another system of equations where you input a coordinate and values for roll, pitch and yaw and get the rotated coordinate as the output. I found this blog post by Oscar Liang really helpful: http://blog.oscarliang.net/inverse-kinematics-implementation-hexapod-robots/
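As a concrete version of the "school math" route, here is a minimal inverse-kinematics sketch for one leg with three servos (hip roll, hip pitch, knee), which is what twelve servos on four legs suggests. It assumes the roll and pitch axes intersect, the knee points forward, and both leg segments are 80 mm long; the names and numbers are hypothetical, not taken from the author's IK tab. It only uses `<math.h>`, so it should compile both on a PC and in an Arduino sketch.

```cpp
#include <math.h>

// Hypothetical segment lengths in millimetres; measure your own robot.
const float UPPER_LEG_MM = 80.0f;  // hip pitch axis to knee axis
const float LOWER_LEG_MM = 80.0f;  // knee axis to foot tip
const float kPi = 3.14159265f;

struct LegAngles {
  float roll;  // hip abduction in radians; 0 = leg hangs straight down
  float hip;   // hip pitch in radians; 0 = upper leg vertical, + = forward
  float knee;  // knee bend in radians; 0 = leg fully stretched
};

// Keep acos() inputs inside [-1, 1] despite rounding.
static float clampUnit(float v) { return v < -1.0f ? -1.0f : (v > 1.0f ? 1.0f : v); }

// Foot target in the leg's own frame, origin at the hip:
// x forward, y outward (away from the body), z downward, all in mm.
// Returns false if the target is out of reach.
bool legIK(float x, float y, float z, LegAngles& out) {
  float d = sqrt(y * y + z * z);  // leg length as seen from the front
  float r2 = x * x + d * d;
  float r = sqrt(r2);             // straight-line hip-to-foot distance
  if (r <= 0.0f || r > UPPER_LEG_MM + LOWER_LEG_MM ||
      r < fabs(UPPER_LEG_MM - LOWER_LEG_MM)) {
    return false;
  }

  // 1. Roll: tilt the leg plane sideways so it contains the foot.
  out.roll = atan2(y, z);

  // 2. Law of cosines in the hip-knee-foot triangle.
  float kneeInner = acos(clampUnit(
      (UPPER_LEG_MM * UPPER_LEG_MM + LOWER_LEG_MM * LOWER_LEG_MM - r2) /
      (2.0f * UPPER_LEG_MM * LOWER_LEG_MM)));
  float angleAtHip = acos(clampUnit(
      (UPPER_LEG_MM * UPPER_LEG_MM + r2 - LOWER_LEG_MM * LOWER_LEG_MM) /
      (2.0f * UPPER_LEG_MM * r)));

  // 3. Hip pitch = direction of the hip-foot line plus the triangle's hip angle.
  out.hip = atan2(x, d) + angleAtHip;
  out.knee = kPi - kneeInner;
  return true;
}
```

A quick sanity check is to feed the angles back through forward kinematics (knee at `hip`, lower leg at `hip - knee`) and confirm the foot lands on the target, which is essentially what the Excel sheet is good for.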

I made an Excel sheet for both calculations:

The Serial tab manages the incoming data from the Bluetooth module and the ultrasonic sensor. This works the same way as reading characters sent from the Serial Monitor of the Arduino IDE.
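Reading commands character by character usually comes down to collecting bytes until a line ending arrives. The sketch below assumes a hypothetical message format of one command letter followed by an optional signed integer and a newline (for example `S25`); the post does not describe the format the Android app actually sends. On the robot, each byte would come from `Serial.read()` (or whichever serial port the Bluetooth module is wired to) while `Serial.available()` is non-zero.

```cpp
#include <stdlib.h>

// Hypothetical command: a letter plus an integer, e.g. 'S' and 25.
struct Command {
  char letter;
  int value;
};

class CommandParser {
 public:
  // Feed one received byte. Returns true when a complete line has arrived;
  // the parsed command is then stored in `out`.
  bool feed(char c, Command& out) {
    if (c == '\r') return false;  // ignore CR from CRLF line endings
    if (c == '\n') {
      if (len_ == 0) return false;  // empty line, nothing to report
      buf_[len_] = '\0';
      out.letter = buf_[0];
      out.value = atoi(buf_ + 1);  // 0 if no digits follow the letter
      len_ = 0;
      return true;
    }
    if (len_ < kMaxLen) buf_[len_++] = c;  // silently drop overlong input
    return false;
  }

 private:
  static const int kMaxLen = 15;
  char buf_[kMaxLen + 1];
  int len_ = 0;
};
```

Buffering up to the newline means a command is only acted on once it has fully arrived, so a half-received value never reaches the gait code.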

Servos are controlled directly using the Servo library.
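With the Servo library, each servo is attached to a pin and then driven with `write()` (degrees) or `writeMicroseconds()`. Cheap analog servos rarely center exactly, so a per-servo trim and a direction flag for mirrored legs are common additions. The pin numbers, trims, directions and the 10 µs-per-degree scale below are placeholders, not measured values from this robot; for comparison, the library's `write()` maps 0 to 180 degrees onto 544 to 2400 µs by default.

```cpp
// Hypothetical wiring on the Mega: servo index = leg * 3 + joint,
// legs ordered LF, RF, LH, RH and joints ordered roll, hip, knee.
const int SERVO_PINS[12] = {22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33};

// Placeholder calibration values; every cheap servo needs its own.
const int TRIM_US[12]   = {0, 20, -10, 0, 0, 0, 0, 0, 0, 0, 0, 0};
const int DIRECTION[12] = {1, 1, 1, -1, -1, -1, 1, 1, 1, -1, -1, -1};  // right legs mirrored

const float US_PER_DEG = 10.0f;  // approximate scale, check your servos
const int CENTER_US = 1500;
const int MIN_US = 600;          // conservative limits, away from the end stops
const int MAX_US = 2400;

int servoIndex(int leg, int joint) { return leg * 3 + joint; }

// Joint angle in degrees (0 = neutral pose) -> pulse width in microseconds.
int angleToMicros(int servo, float angleDeg) {
  float us = CENTER_US + TRIM_US[servo] + DIRECTION[servo] * angleDeg * US_PER_DEG;
  if (us < MIN_US) us = MIN_US;
  if (us > MAX_US) us = MAX_US;
  return (int)(us + 0.5f);
}

// On the Arduino this would be used roughly like:
//   #include <Servo.h>
//   Servo servos[12];
//   servos[i].attach(SERVO_PINS[i]);                        // in setup()
//   servos[i].writeMicroseconds(angleToMicros(i, angle));   // in loop()
```

Keeping the calibration in tables means the IK code can work with clean joint angles and never needs to know which servo is mounted backwards.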

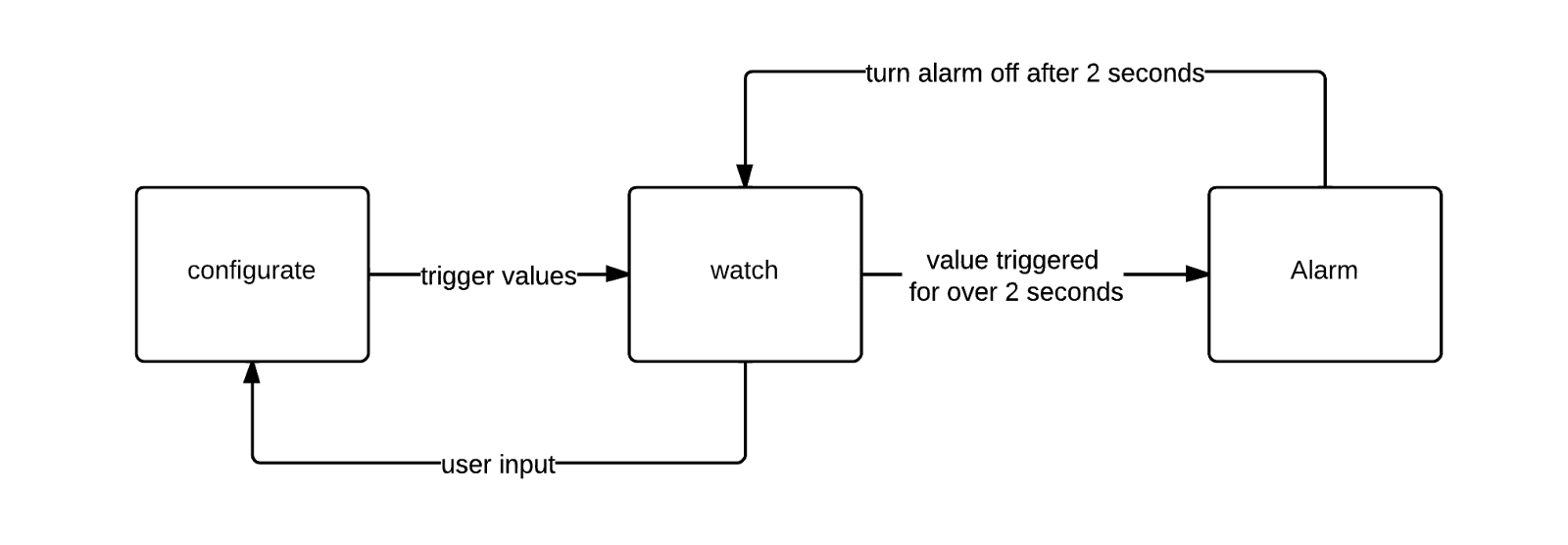

The entire program works like this: first, the Bluetooth function is called to check for new input. Then the appropriate gait function is selected, which calculates the coordinates for every leg. After that, the rotation and translation are calculated. To get the right servo angles, the coordinates have to be converted from “full body” to “single leg” coordinates; then the inverse kinematics function can work its magic. Finally, the servos are moved to the calculated angles.
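The middle of that loop can be sketched as follows, assuming a body frame with x forward, y to the left and z up, and a rotation order of yaw, then pitch, then roll applied as R = Rz·Ry·Rx (one common convention; the post does not state which one it uses). Because the feet stay planted while the body tilts or shifts, a foot position from the gait is moved into the body frame by undoing the body pose, and is then shifted to the hip of its leg. `BodyPose`, `groundToBody`, `bodyToLeg`, `footTargetForIK` and the hip coordinates are hypothetical names, and the leg frame produced here (x forward, y outward, z down) is just one choice.

```cpp
#include <math.h>

struct Vec3 { float x, y, z; };

// Elementary rotations about the body axes (angles in radians).
Vec3 rotX(Vec3 p, float a) { float c = cos(a), s = sin(a); return {p.x, c * p.y - s * p.z, s * p.y + c * p.z}; }
Vec3 rotY(Vec3 p, float a) { float c = cos(a), s = sin(a); return {c * p.x + s * p.z, p.y, -s * p.x + c * p.z}; }
Vec3 rotZ(Vec3 p, float a) { float c = cos(a), s = sin(a); return {c * p.x - s * p.y, s * p.x + c * p.y, p.z}; }

// Desired body translation (mm) and orientation (radians).
struct BodyPose { float x, y, z, roll, pitch, yaw; };

// Undo the body pose: subtract the translation, then apply the inverse
// rotation Rx(-roll) * Ry(-pitch) * Rz(-yaw) of R = Rz(yaw) * Ry(pitch) * Rx(roll).
Vec3 groundToBody(Vec3 foot, const BodyPose& pose) {
  Vec3 p = {foot.x - pose.x, foot.y - pose.y, foot.z - pose.z};
  return rotX(rotY(rotZ(p, -pose.yaw), -pose.pitch), -pose.roll);
}

// "Full body" -> "single leg": move the origin to the leg's hip joint and
// flip axes so every leg sees x forward, y outward and z downward.
Vec3 bodyToLeg(Vec3 p, Vec3 hip, bool leftSide) {
  float side = leftSide ? 1.0f : -1.0f;
  return {p.x - hip.x, side * (p.y - hip.y), -(p.z - hip.z)};
}

// One leg's share of the loop: gait target -> body frame -> leg frame,
// ready to be handed to the leg's inverse kinematics.
Vec3 footTargetForIK(Vec3 gaitFoot, const BodyPose& pose, Vec3 hip, bool leftSide) {
  return bodyToLeg(groundToBody(gaitFoot, pose), hip, leftSide);
}
```

Raising the body by 10 mm, for example, shows up in the leg frame as the foot being 10 mm further below the hip, so the IK stretches the leg accordingly.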

Walking Gait

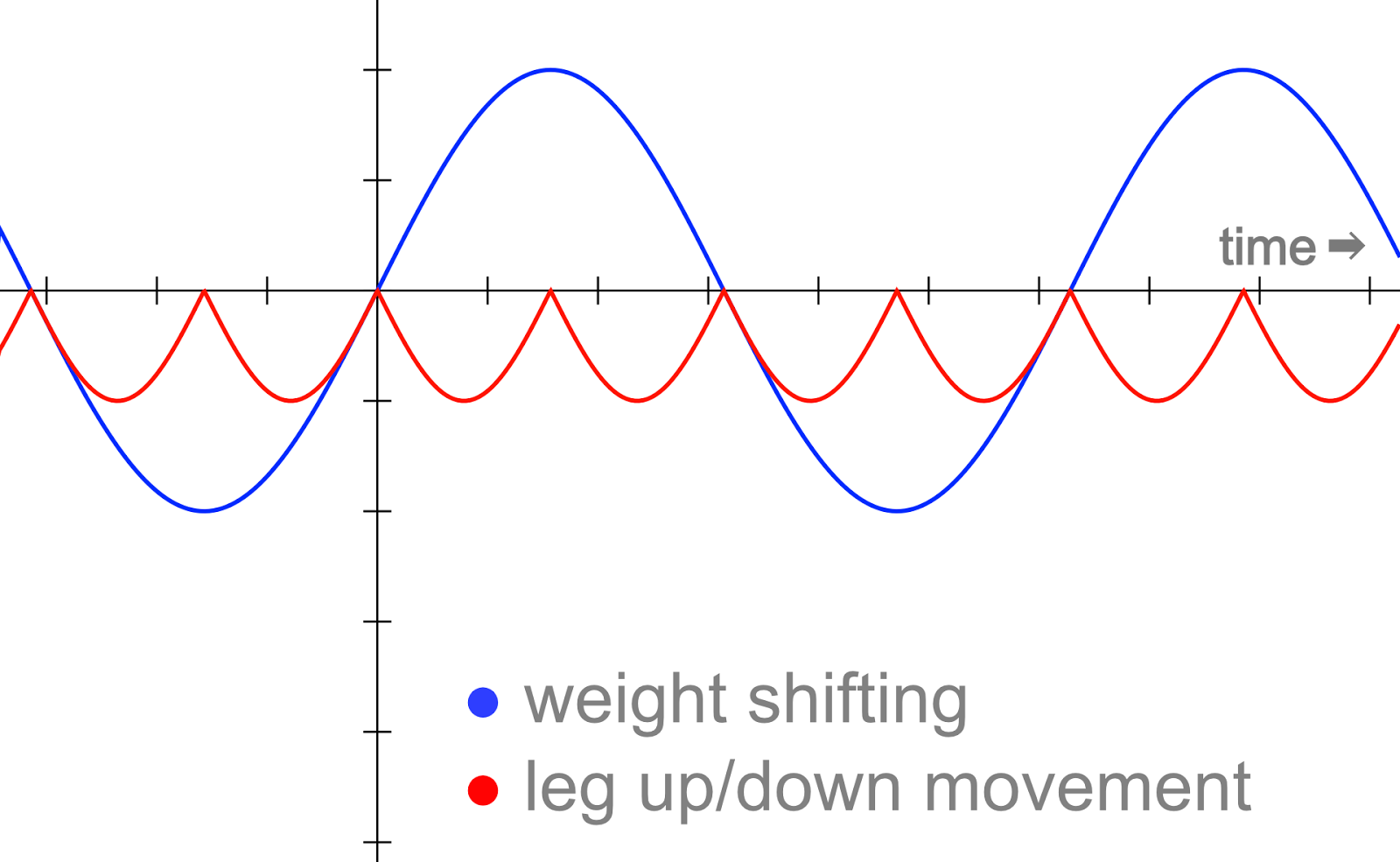

Gaits are probably the most complicated thing about legged robots. Because I wanted quick results, I made a simple walking gait based on sine functions. As you can see in the diagram, the legs are lifted one after the other using a function (red) like -|sin(x)|. When one leg is in the air, the robot tends to fall over. Therefore a second function (blue) moves the body away from the lifted leg. The advantage of this gait is that the movements are fluid, but the robot can only move in one direction.
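A minimal version of such a sine-based walk is sketched below. It assumes a lateral-sequence leg order (left hind, left front, right hind, right front), gives each leg a quarter of the cycle to swing, and sways the body with a single sine so it leans right while the left legs are lifted and left while the right legs are. The post writes the lift as -|sin(x)|; the sign only depends on which way the z axis points, so here the lift is returned as a positive height. All names and parameters are hypothetical, and the sway phase would likely need tuning on the real robot so the body has already shifted when a leg leaves the ground.

```cpp
#include <math.h>

const float kPi = 3.14159265f;

// Hypothetical lateral-sequence order: leg i is in the air while the cycle
// phase t is in [i/4, (i+1)/4).
enum Leg { LEFT_HIND = 0, LEFT_FRONT = 1, RIGHT_HIND = 2, RIGHT_FRONT = 3 };

struct GaitParams {
  float liftMm;    // peak foot lift
  float swayMm;    // peak sideways body shift
  float strideMm;  // forward distance covered by one swing
};

// Foot lift in mm for cycle phase t in [0, 1): one hump of a sine while the
// leg swings (the same shape as one hump of |sin|), zero while it stands.
float footLift(float t, int leg, const GaitParams& g) {
  float local = t * 4.0f - leg;  // 0..1 during this leg's swing
  if (local < 0.0f || local >= 1.0f) return 0.0f;
  return g.liftMm * sin(kPi * local);
}

// Forward foot offset in mm: the foot moves from -stride/2 to +stride/2 while
// lifted, then slides back at constant speed during the other three quarters,
// which pushes the body forward.
float footStride(float t, int leg, const GaitParams& g) {
  float local = t * 4.0f - leg;
  if (local < 0.0f) local += 4.0f;  // time since this leg's swing began, 0..4
  if (local < 1.0f) return g.strideMm * (local - 0.5f);
  return g.strideMm * (0.5f - (local - 1.0f) / 3.0f);
}

// Sideways body offset in mm (positive = toward the right side). The left legs
// swing in the first half of the cycle and the right legs in the second, so
// one sine moves the body away from whichever leg is lifted.
float bodySway(float t, const GaitParams& g) {
  return g.swayMm * sin(2.0f * kPi * t);
}
```

Because every output is a smooth function of one phase variable, the motion stays fluid; the flip side, as noted above, is that this gait only walks in one direction.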

I tried to program a trot gait later, but the rapid leg movement is too much for the servos and the frame.

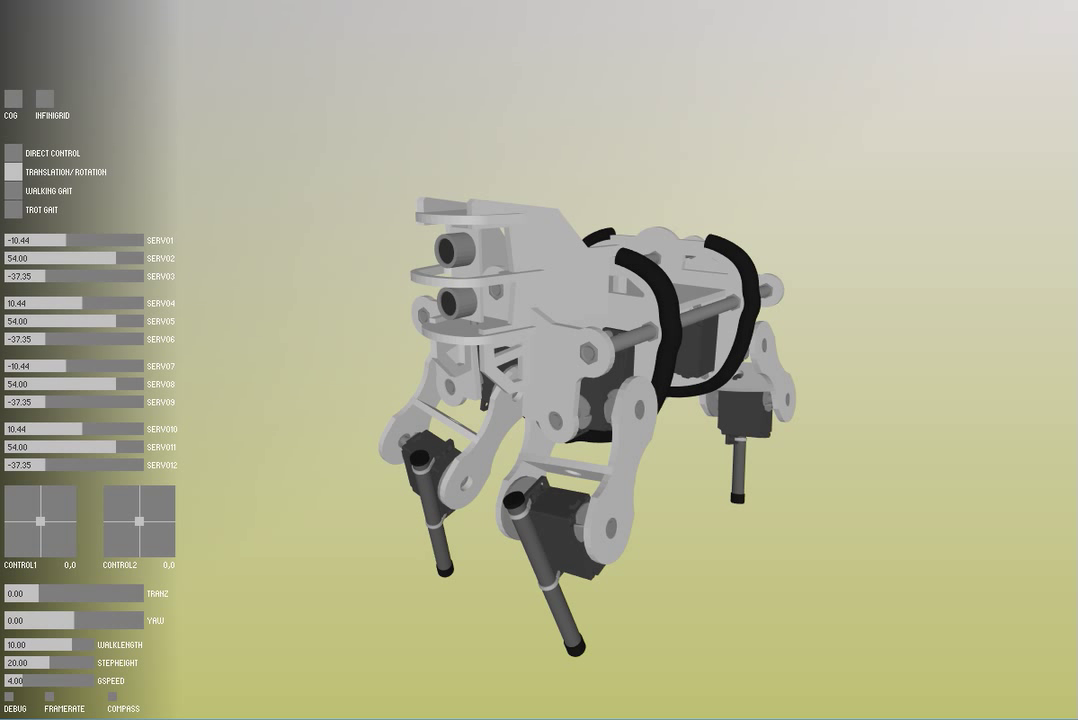

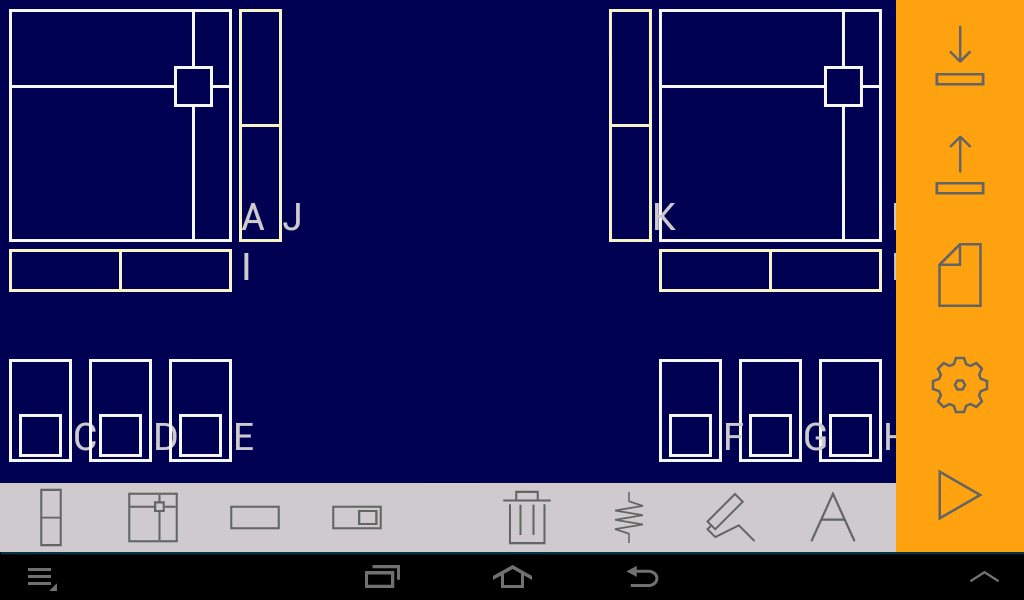

Processing Simulator

Figuring out the gaits is a tedious task. Even the smallest change to the code requires you to flash the Arduino, plug the battery back in and lift the robot out of its stand. If you make a mistake, the robot will fall over or the servos will move in the wrong direction, eventually destroying themselves or other mechanical parts. After this happened to me a few times, I began to search for an easier way of testing. There are some robotics simulators (e.g. Gazebo, V-REP), but I found them too complicated; importing the robot’s geometry alone took me forever.

I ended up writing my own simulator, and Processing is the perfect choice for this. It has libraries for graphical user interfaces and can import and render different CAD files. The best thing is that you can practically drag and drop Arduino code into Processing (because Arduino was originally based on Processing). There are a few minor differences; for example, arrays are initialized differently. But if you avoid those, you can simulate the exact behavior of the robot and copy the working code back to the Arduino.
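One such difference is the array declaration syntax. Java, and therefore Processing, does not allow a size inside the declaration (`int a[4] = {...};`), while C++ does not accept Java's `int[] a = {...};`. The unsized C-style form should be accepted by both, so tables kept in that form can be copied between the Arduino sketch and the simulator unchanged. The array names and values below are hypothetical.

```cpp
// Unsized C-style declarations: valid C++, and also valid Java syntax,
// so these lines can be shared between the sketch and the simulator.
int hipServoPins[] = {23, 26, 29, 32};
float legLengthsMm[] = {80, 80};  // whole numbers sidestep float-literal differences

// Arduino-only (Java does not allow a size in the declaration):
//   int hipServoPins[4] = {23, 26, 29, 32};
// Processing-only (not valid C++):
//   int[] hipServoPins = {23, 26, 29, 32};
// Counting elements differs too: sizeof(a) / sizeof(a[0]) in C++, a.length in Java.
```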

Compiling the Processing sketch only takes around 5 seconds.

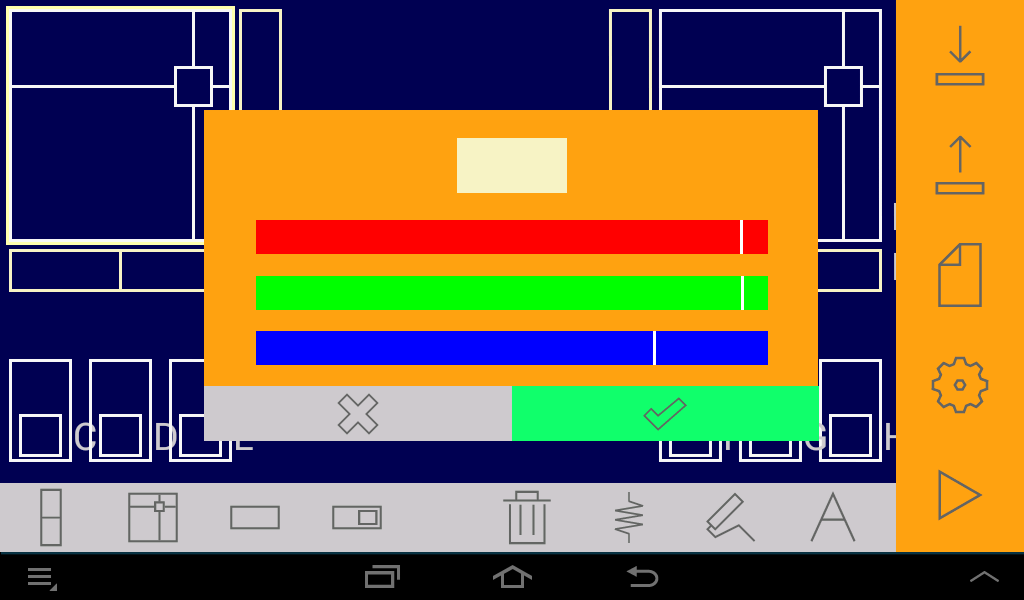

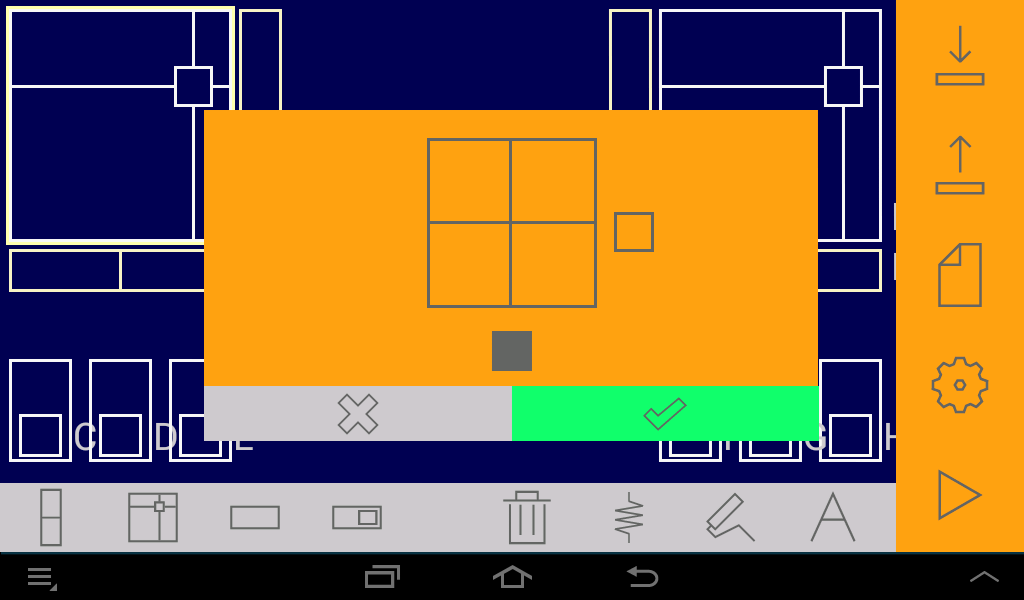

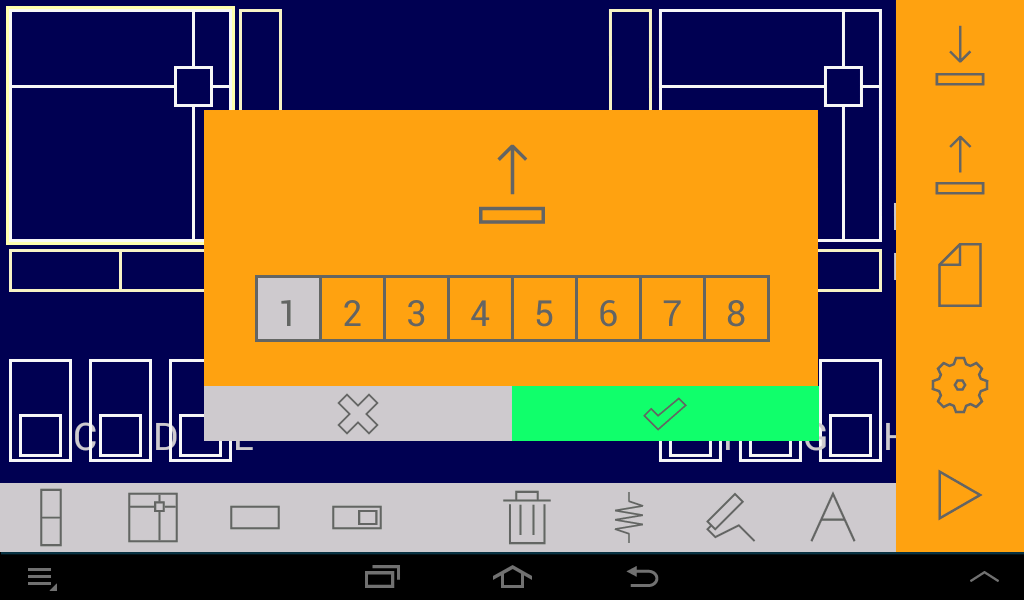

The final simulator version can display the robot in color and has a user interface with buttons and sliders. There are different modes for manual servo control and for moving the robot along different axes (I even found a bug in my IK code this way).

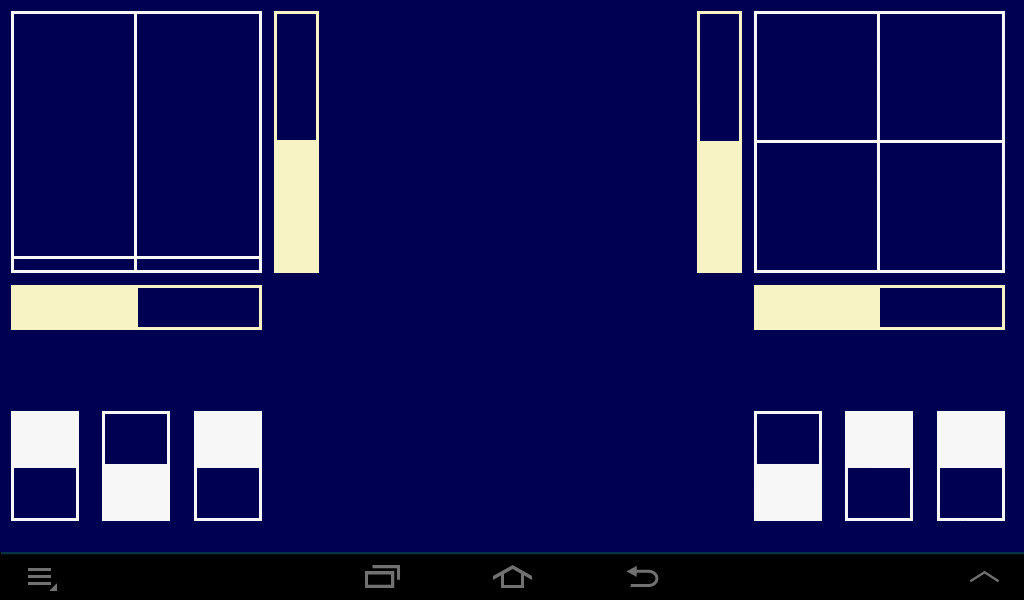

Android App

I needed a quick way to change variables on the Arduino without reflashing it, so I bought a Bluetooth module. Since I couldn’t find a suitable Android app, I wrote my own using Processing for Android. It is basically the same app I wrote about in an article last year: http://coretechrobotics.blogspot.com/2013/12/controlling-arduino-with-android-device.html

The only difference is an additional sonar view that displays the values of the ultrasonic sensor.

Conclusion

Considering the low budget, I am pretty happy with the robot so far. The stability and servo power are acceptable. I am already working on more advanced gaits, but even with the simulator this is a time-consuming process.
